Edge Computing Software is redefining how organizations respond to real-time data. Applications act at the edge instead of waiting on round trips to a distant cloud, which is making edge software a cornerstone of modern digital infrastructure. An edge computing architecture that spans devices, gateways, and regional nodes lets teams partition workloads, push compute closer to data sources, use resources more efficiently, and scale capacity as demand shifts across environments. This shift reduces latency and makes response times more predictable, so critical workflows keep pace as data streams grow. Examples range from predictive maintenance on factory floors to real-time sensor fusion in autonomous systems. The same design supports graceful degradation and local decision-making when connectivity is temporarily lost. Analyzing data near its source makes local processing practical: it trims bandwidth usage, allows offline operation, and eases regulatory compliance by keeping sensitive information close. It also keeps systems running where connectivity is intermittent. Across industries, sound deployment models, careful data governance, and scalable architectures help keep distributed edge ecosystems resilient, auditable, and secure as Edge Computing Software scales to larger fleets.

Edge computing is also described as near-edge computing, decentralized data processing, or on-device analytics, all of which keep decisions close to the source. A modern edge-native software stack emphasizes lightweight microservices, autonomous orchestration at the edge, and secure over-the-air (OTA) updates to sustain performance across a distributed fleet. Techniques such as on-device inference, model quantization, and federated learning show how Edge AI deployment can proceed without funneling raw data to a central data center. Combined with strong edge security and data locality, these approaches preserve governance and privacy while enabling scalable, resilient services across locations. The broader goal is a cohesive platform that blends edge intelligence with cloud-backed insights for urban, industrial, and rural deployments. Such a platform lets Edge Computing Software scale to larger fleets without sacrificing control or security.
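To make the federated learning idea concrete, here is a minimal sketch of federated averaging (FedAvg). Each site trains locally and reports only its model weights and its local sample count. A coordinator then combines the weights, weighting each site by how much data it trained on. The function name `federated_average` and the flat-list weight format are illustrative assumptions, not part of any particular framework.

```python
from typing import List, Tuple

# Hypothetical format: each site reports (flat weight vector, number of local samples).
# Raw training data never leaves the site; only these summaries are shared.
SiteUpdate = Tuple[List[float], int]


def federated_average(updates: List[SiteUpdate]) -> List[float]:
    """Merge per-site weights, weighting each site by its sample count (FedAvg-style)."""
    if not updates:
        raise ValueError("need at least one site update")
    dim = len(updates[0][0])
    total = sum(n for _, n in updates)
    if total <= 0:
        raise ValueError("total sample count must be positive")
    merged = [0.0] * dim
    for weights, n in updates:
        if len(weights) != dim:
            raise ValueError("all sites must report weights of the same shape")
        for i, w in enumerate(weights):
            merged[i] += w * n / total  # each site contributes in proportion to its data
    return merged


if __name__ == "__main__":
    site_a = ([1.0, 2.0], 100)
    site_b = ([3.0, 4.0], 300)
    print(federated_average([site_a, site_b]))  # site_b dominates: it holds 75% of samples
```

Real deployments usually add secure aggregation and run several training rounds. The weighting step shown here is the core of the averaging.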

Edge Computing Software for Low Latency and Local Processing

Edge Computing Software brings compute closer to data sources, dramatically reducing round-trip time and enabling local processing on edge devices. This approach leverages a distributed edge computing architecture to keep critical data near its source, delivering low latency and improved responsiveness for time-sensitive tasks.
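The following is a minimal sketch of local processing on an edge device. It evaluates every reading in a batch locally and then sends upstream only the readings that cross an alert threshold, plus a compact summary. The `Reading` type, the `process_batch` function, and the threshold value are hypothetical, chosen only to illustrate the bandwidth trade-off.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, List, Tuple


@dataclass
class Reading:
    sensor_id: str
    value: float


def process_batch(
    readings: Iterable[Reading], threshold: float
) -> Tuple[List[Reading], Dict[str, float]]:
    """Evaluate every reading on the device and return (alerts, summary).

    Only the alerts and the compact summary are sent upstream; the raw
    readings stay on the device.
    """
    batch = list(readings)
    if not batch:
        return [], {"count": 0}
    values = [r.value for r in batch]
    alerts = [r for r in batch if r.value > threshold]  # decided locally, no cloud round trip
    summary = {"count": len(values), "mean": sum(values) / len(values), "max": max(values)}
    return alerts, summary


if __name__ == "__main__":
    batch = [Reading("temp-1", v) for v in (20.0, 22.0, 95.0, 19.0)]
    alerts, summary = process_batch(batch, threshold=80.0)
    print(len(alerts), summary)
```

In this example, four raw readings shrink to one alert and a three-field summary. The alert decision itself never waits on the network.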

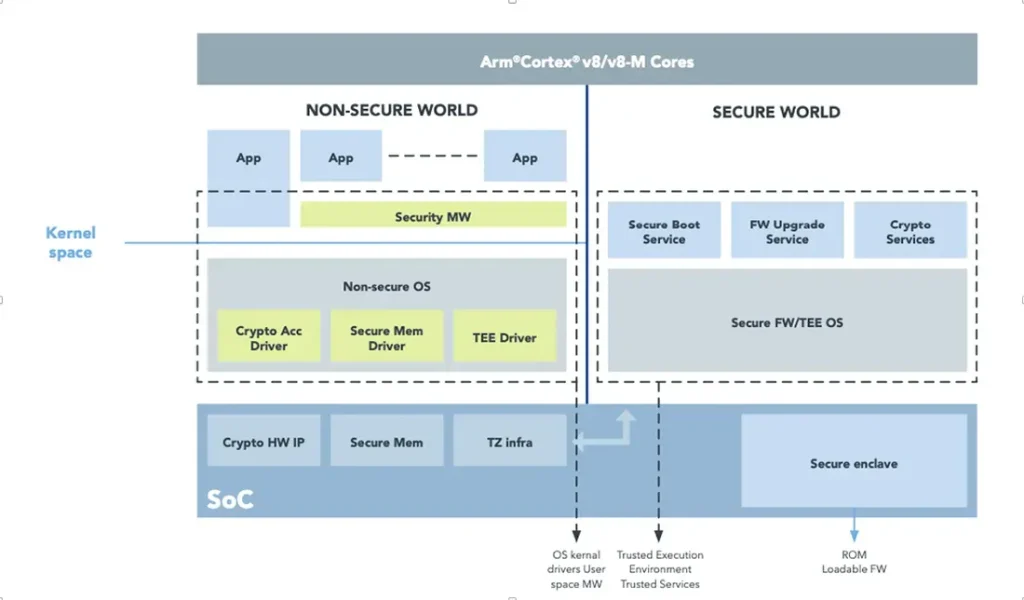

For Edge AI deployment, models run directly on edge devices, providing real-time inference while reducing bandwidth use and dependence on the cloud. The software stack should be lean and able to use hardware accelerators. It should also embed edge security practices such as secure boot, trusted execution environments, and strong authentication to protect the edge attack surface.
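Model quantization is one way to keep edge models lean. Below is a minimal sketch of symmetric per-tensor int8 post-training quantization, which stores weights as 8-bit integers plus a single float scale. The helper names are hypothetical. Production toolchains use more elaborate schemes, such as per-channel scales and calibration data.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric int8 quantization: scale = max|w| / 127, q = clamp(round(w / scale))."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0  # avoid division by zero for all-zero tensors
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Approximate the original float weights from int8 values and the shared scale."""
    return [v * scale for v in q]


if __name__ == "__main__":
    w = [0.5, -1.27, 0.0, 1.27]
    q, scale = quantize_int8(w)
    print(q, scale, dequantize(q, scale))
```

Each weight shrinks from a 32-bit float to 8 bits. The rounding error per weight is bounded by half the scale.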

Edge Security, Observability, and Management in Edge Deployments

Edge security is foundational to a trusted edge computing software stack. Because the perimeter is distributed across many devices and sites, implement device identity with certificate-based authentication, encryption in transit and at rest, and a secure software supply chain to prevent tampering across the edge ecosystem.
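A secure supply chain implies that a device refuses to install an update it cannot verify. Here is a minimal sketch of that check using an HMAC-SHA256 tag from the Python standard library. A shared symmetric key is a simplifying assumption made here. Production update pipelines typically use asymmetric signatures (for example Ed25519) so that devices hold only a public key. The key and function names are hypothetical.

```python
import hashlib
import hmac


def sign_artifact(payload: bytes, key: bytes) -> str:
    """Produce a hex HMAC-SHA256 tag for an update artifact (build-server side)."""
    return hmac.new(key, payload, hashlib.sha256).hexdigest()


def verify_artifact(payload: bytes, signature_hex: str, key: bytes) -> bool:
    """Device side: recompute the tag and compare in constant time before installing."""
    expected = sign_artifact(payload, key)
    return hmac.compare_digest(expected, signature_hex)


if __name__ == "__main__":
    key = b"demo-device-key"  # hypothetical; real keys belong in secure hardware
    blob = b"firmware v1.2.3"
    tag = sign_artifact(blob, key)
    print(verify_artifact(blob, tag, key), verify_artifact(blob + b"!", tag, key))
```

`hmac.compare_digest` is used instead of `==` so that the comparison time does not leak how many leading characters matched.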

Observability and centralized management are essential for scale. Lightweight edge orchestration, reliable OTA updates, and comprehensive telemetry enable rapid troubleshooting and optimization. By maintaining observability at the edge alongside a robust edge computing architecture, teams can monitor latency, reliability, and data quality both locally and in the cloud.
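One way to keep edge telemetry lightweight is to summarize latency on the device and ship only percentiles to the cloud. The sketch below uses the nearest-rank percentile method. The function names and the summary field names are illustrative assumptions, not a specific monitoring product's schema.

```python
import math
from typing import Dict, List


def percentile(sorted_values: List[float], p: float) -> float:
    """Nearest-rank percentile of a sorted, non-empty list, for 0 < p <= 100."""
    if not 0 < p <= 100:
        raise ValueError("p must be in (0, 100]")
    rank = math.ceil(p * len(sorted_values) / 100)
    return sorted_values[max(rank, 1) - 1]


def latency_summary(samples_ms: List[float]) -> Dict[str, float]:
    """Reduce raw request latencies to a compact record suitable for upstream telemetry."""
    if not samples_ms:
        raise ValueError("no samples")
    s = sorted(samples_ms)
    return {
        "count": len(s),
        "p50_ms": percentile(s, 50),
        "p95_ms": percentile(s, 95),
        "max_ms": s[-1],
    }


if __name__ == "__main__":
    print(latency_summary([12.0, 9.5, 30.2, 11.1, 10.4]))
```

Sending four numbers per interval instead of every sample keeps monitoring traffic small. Tail latency (p95) remains visible both locally and in the cloud.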

Frequently Asked Questions

How does Edge Computing Software optimize low latency and local processing within edge computing architecture?

Edge Computing Software runs data processing close to the data source, delivering low latency and enabling local processing on edge devices or gateways. Within an edge computing architecture, it orchestrates distributed microservices, lean data paths, and near-real-time analytics while reducing bandwidth and keeping data resident locally. Designing for efficiency, processing data as events arrive, and using hardware acceleration where available all help maintain fast response times across the edge.
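Event-driven, incremental processing is a large part of keeping data paths lean. The minimal sketch below updates an exponentially weighted moving average (EWMA) per sensor as each event arrives, storing only one number per sensor. It fires a callback when the smoothed value crosses a limit. The class name, `alpha`, `limit`, and the callback signature are hypothetical choices for illustration.

```python
from typing import Callable, Dict, Optional


class EwmaProcessor:
    """Incremental smoothing: each event updates O(1) state per sensor,
    so no history has to be stored or re-scanned on the edge device."""

    def __init__(self, alpha: float, limit: float, on_alert: Callable[[str, float], None]):
        if not 0 < alpha <= 1:
            raise ValueError("alpha must be in (0, 1]")
        self.alpha = alpha
        self.limit = limit
        self.on_alert = on_alert
        self.state: Dict[str, float] = {}

    def handle(self, sensor_id: str, value: float) -> float:
        """Process one event and return the updated smoothed value."""
        prev: Optional[float] = self.state.get(sensor_id)
        smoothed = value if prev is None else self.alpha * value + (1 - self.alpha) * prev
        self.state[sensor_id] = smoothed
        if smoothed > self.limit:
            self.on_alert(sensor_id, smoothed)  # react locally, no cloud round trip
        return smoothed


if __name__ == "__main__":
    proc = EwmaProcessor(alpha=0.5, limit=50.0, on_alert=lambda s, v: print("ALERT", s, v))
    for reading in (10.0, 30.0, 110.0):
        proc.handle("pump-1", reading)
```

A larger `alpha` reacts faster to spikes, and a smaller one filters out more noise. The right value depends on the sensor and is not prescribed here.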

What deployment and security considerations should be applied in Edge Computing Software to support Edge AI deployment and robust Edge security?

Key considerations include:

- Deployment patterns: use distributed microservices and lightweight edge orchestration to scale across sites.
- Secure software lifecycle: use CI/CD pipelines and OTA updates to push only verified edge software.
- Edge security: apply device identity and certificate-based authentication, encryption in transit and at rest, secure boot, trusted execution environments, and a hardened software supply chain.
- Edge AI deployment readiness: optimize models for the edge (quantization, pruning) and use edge-friendly runtimes.
- Data governance and reliability: minimize data movement, protect privacy, and design for intermittent connectivity with local state and offline operation.
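Designing for intermittent connectivity often means store-and-forward. Records are queued locally while the uplink is down and then flushed in order once it returns. The minimal sketch below uses a bounded `collections.deque`. When the queue is full, the oldest record is dropped, which is an assumed policy. The class name and the `send` callback are hypothetical.

```python
from collections import deque
from typing import Callable, Deque


class StoreAndForward:
    """Bounded local queue for intermittent links.

    `send` returns True on successful delivery. Undelivered records stay
    queued in order; when the queue is full, the oldest record is discarded
    (deque maxlen behaviour).
    """

    def __init__(self, send: Callable[[str], bool], capacity: int = 1000):
        self.send = send
        self.buffer: Deque[str] = deque(maxlen=capacity)

    def publish(self, record: str) -> None:
        self.buffer.append(record)
        self.flush()

    def flush(self) -> int:
        """Try to deliver queued records in order; return how many were sent."""
        sent = 0
        while self.buffer:
            if not self.send(self.buffer[0]):
                break  # uplink unavailable: keep the record and retry on the next flush
            self.buffer.popleft()
            sent += 1
        return sent
```

Dropping the oldest data suits telemetry, where recent readings matter most. Audit or billing records would instead need durable on-disk storage and a no-drop policy.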

| Aspect | Key Points |
|---|---|
| Introduction and Value | Edge computing software reduces latency by processing data near its source, enabling faster decision-making and new capabilities beyond cloud-only solutions. |
| Understanding Edge Computing Software | Defines applications and services that run on edge devices/gateways to process data locally, reducing bandwidth use and enabling offline operation. |
| Designing for Low Latency | Bring computation closer to data sources, optimize data paths, use incremental processing, design for concurrency and fault tolerance, and leverage hardware accelerators when available. |
| Local Processing and Data Locality | Prioritize data locality, maintain data residency, support offline/intermittent connectivity, and use stateful microservices with durable central storage when needed. |
| Edge Computing Architecture Considerations | Distributed microservices at the edge, edge orchestration, data funnels/hubs, and security-by-design to protect the edge perimeter. |
| Software Design Patterns for Edge Environments | Stateless services with distributed state, event-driven processing, edge data compression, and edge AI deployment considerations (models near data source). |
| Security and Compliance at the Edge | Device identity and access management, encryption in transit and at rest, secure software supply chain, and segmentation with least privilege. |
| Deployment, Management, and Observability | CI/CD for the edge, monitoring and telemetry, remote configuration, and comprehensive observability (traces, logs, metrics) across edge and cloud. |
| Case Studies and Real-World Applications | Manufacturing/predictive maintenance, retail experiences, transportation, and smart cities demonstrate latency-sensitive edge deployments. |
| Challenges and Trade-offs | Resource constraints, fragmentation, data governance, and reliability/maintenance require lean design, portability, and robust OTA/remediation. |
| Future Trends in Edge Computing Software | More AI at the edge, improved orchestration, privacy-preserving analytics, and 5G-enabled real-time applications. |