AI, Cloud, and Edge Computing are reshaping how organizations in every industry design, deploy, and scale software and services. When AI moves closer to data sources while cloud platforms provide orchestration and training capacity, businesses gain faster insights and more responsive experiences across customer journeys. The resulting stack supports real-time inference, smarter decision-making, proactive automation, and new revenue streams across sectors. To capitalize on it, teams adopt practices such as robust data governance and resilient architectures that balance latency, cost, and compliance. As devices generate massive volumes of data at the edge, new architectural patterns and governance practices are emerging to support distributed analytics and secure data sharing with partners.

From a Latent Semantic Indexing (LSI) perspective, the topic can be described as intelligent systems distributed across a network, with processing at the edge that complements centralized cloud services. This framing uses related terms such as edge-native analytics and distributed AI to signal associated concepts to search engines. In practice, organizations deploy fog computing to balance latency and bandwidth while keeping governance intact. Describing the approach alongside cloud computing trends also improves visibility and relevance, and this vocabulary helps readers connect to broader ideas such as intelligent edge architectures and edge-cloud integration.

AI, Cloud, and Edge Computing: Unifying Real-Time Intelligence Across the Edge and Cloud

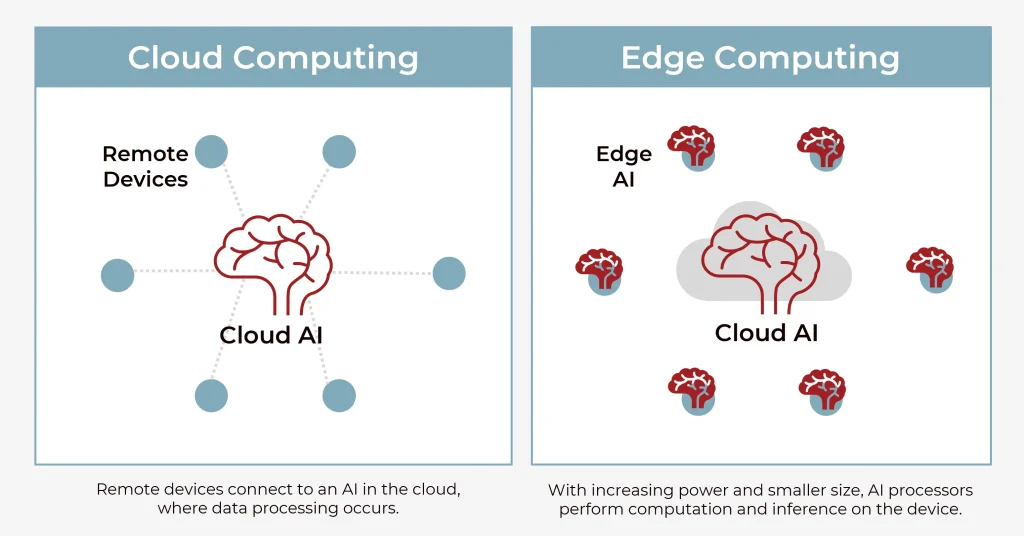

AI, Cloud, and Edge Computing are not mere buzzwords for the next decade; they form a converging technology stack that lets organizations design, deploy, and scale software and services with greater speed and intelligence. By bringing AI capabilities to the edge, closer to data sources and users, businesses can unlock real-time insights while reducing latency, bandwidth costs, and data movement. This edge-aware approach sits atop cloud platforms that provide the scalable backbone and robust governance needed to train models, manage data, and orchestrate workloads at scale. In practice, the edge-cloud continuum supports a right-place/right-time pattern: latency-sensitive work runs near the source, while global analytics run centrally. AI at the edge adds responsiveness where it matters most, such as in industrial control or personalized experiences.

Real-time inference at the edge complements cloud-based AI training and analytics. AI at the edge brings models to the data, enabling near-instant decisions and continued operation when connectivity is limited. Large models trained in the cloud produce sophisticated predictions, while edge environments run low-latency inference and local decision-making. This division of labor reduces data movement, lowers bandwidth costs, and strengthens privacy by keeping sensitive processing close to the source. Fog computing can act as an intermediate layer that aggregates data from many edge nodes before forwarding summarized insights to the cloud, helping to optimize latency, bandwidth, and compute.
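To make the fog layer concrete, here is a minimal Python sketch of its aggregation step, assuming each edge node reports a list of numeric readings per time window. The `Summary` type and `aggregate_at_fog` function are hypothetical names for illustration; a real fog node would also track timestamps, units, and transport.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Dict, List


@dataclass
class Summary:
    """Compact per-device summary a fog node might forward to the cloud."""
    device_id: str
    count: int
    avg: float
    minimum: float
    maximum: float


def aggregate_at_fog(readings: Dict[str, List[float]]) -> List[Summary]:
    """Collapse raw edge readings into one summary per device.

    Only the summaries leave the fog node, so upstream traffic grows with
    the number of devices rather than the number of raw readings.
    """
    summaries = []
    for device_id, values in sorted(readings.items()):
        if not values:
            continue  # skip devices that reported nothing in this window
        summaries.append(
            Summary(device_id, len(values), mean(values), min(values), max(values))
        )
    return summaries


if __name__ == "__main__":
    window = {
        "sensor-a": [20.1, 20.4, 21.0, 20.7],
        "sensor-b": [35.2, 36.8, 35.9],
        "sensor-c": [],
    }
    for s in aggregate_at_fog(window):
        print(s)
```

Sending min, max, and mean rather than raw samples is one common trade-off; which statistics to keep depends on what the cloud-side analytics actually need.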

Strategically, this triad aligns with cloud computing trends toward modular services, interoperability, and secure, governed data. A well-defined multicloud strategy can distribute workloads across providers to optimize features and resilience while avoiding vendor lock-in. By aligning edge deployments with cloud-based governance, organizations can standardize data classifications, access controls, and audit trails, ensuring compliant movement of data across edge, on-premises, and cloud environments.
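A minimal sketch of a governance gate follows: it checks a dataset's classification before the data moves between edge, on-premises, and cloud environments, and records every decision as an audit-trail entry. The classification labels and the `ALLOWED_DESTINATIONS` policy are hypothetical; a real deployment would load policy from a central governance service rather than hard-code it.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Dict, List, Set

# Hypothetical policy: which environments may receive each data classification.
ALLOWED_DESTINATIONS: Dict[str, Set[str]] = {
    "public": {"edge", "on-prem", "cloud"},
    "internal": {"on-prem", "cloud"},
    "restricted": {"on-prem"},
}


@dataclass
class GovernanceGate:
    audit_log: List[dict] = field(default_factory=list)

    def authorize(self, dataset: str, classification: str, destination: str) -> bool:
        # Unknown classifications map to an empty set, so they are denied by default.
        allowed = destination in ALLOWED_DESTINATIONS.get(classification, set())
        # Every decision, allowed or denied, is appended to the audit trail.
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "dataset": dataset,
            "classification": classification,
            "destination": destination,
            "allowed": allowed,
        })
        return allowed


if __name__ == "__main__":
    gate = GovernanceGate()
    print(gate.authorize("line-telemetry", "public", "cloud"))
    print(gate.authorize("patient-records", "restricted", "cloud"))
```

Denying unknown labels by default keeps a misclassified or unlabeled dataset from silently leaving its environment.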

Designing a Multicloud Strategy with Edge and Fog Computing for Scalable AI Workloads

A practical multicloud strategy distributes workloads across diverse cloud providers to optimize services, pricing, and regulatory alignment while leveraging edge computing to deliver low-latency experiences. Edge devices handle real-time inference and local analytics, reducing dependence on central data centers and enabling offline operation in remote or bandwidth-constrained settings. Fog computing adds a scalable layer of intermediate nodes that perform local analytics and data aggregation, bridging edge devices and the cloud to balance latency, energy use, and throughput.
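One way to reason about placement across these tiers is to walk from the most centralized tier toward the edge and pick the first one that meets the workload's latency budget and offline requirement. The sketch below assumes illustrative round-trip times and a single per-workload compute time on every tier, which is a simplification (edge hardware is usually slower than cloud hardware). `Tier`, `Workload`, and `place` are hypothetical names.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Tier:
    name: str
    round_trip_ms: float   # assumed network round trip from the data source
    works_offline: bool    # keeps serving if the wide-area link drops


@dataclass(frozen=True)
class Workload:
    name: str
    latency_budget_ms: float
    compute_ms: float      # assumed processing time, same on every tier
    needs_offline: bool


# Illustrative numbers only; measure your own sites and providers.
TIERS: List[Tier] = [
    Tier("cloud", round_trip_ms=80.0, works_offline=False),
    Tier("fog", round_trip_ms=15.0, works_offline=True),
    Tier("edge", round_trip_ms=1.0, works_offline=True),
]


def place(workload: Workload, tiers: List[Tier] = TIERS) -> Optional[str]:
    """Return the most centralized tier that satisfies the workload.

    Tiers are ordered from most to least centralized, so pooled cloud
    capacity is preferred whenever latency and offline needs allow it.
    """
    for tier in tiers:
        if workload.needs_offline and not tier.works_offline:
            continue
        if tier.round_trip_ms + workload.compute_ms <= workload.latency_budget_ms:
            return tier.name
    return None  # no tier fits; the budget or the model must change


if __name__ == "__main__":
    print(place(Workload("line-stop", latency_budget_ms=10, compute_ms=5, needs_offline=True)))
    print(place(Workload("regional-dashboard", 50, 20, False)))
```

Returning `None` instead of forcing a placement makes an impossible latency budget visible early, before it becomes a production incident.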

To succeed, organizations must unify data pipelines, security controls, and policy governance across clouds and edge infrastructure. Standardized APIs and open formats help ensure portability of AI models and data, while a robust observability layer provides end-to-end visibility into latency, data lineage, and intrusion attempts. A hybrid approach—training models in the cloud, pushing updates to edge devices, and employing federated or on-device learning when privacy is paramount—aligns with cloud computing trends and supports continuous improvement of AI at the edge.
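The federated option can be illustrated with the weighted-averaging step of Federated Averaging (FedAvg): each device trains locally and reports only its weights and local sample count, and the aggregator averages the weights in proportion to those counts. This sketch uses plain Python lists as a stand-in for model tensors and omits client selection, secure aggregation, and communication.

```python
from typing import List, Sequence, Tuple


def federated_average(updates: Sequence[Tuple[List[float], int]]) -> List[float]:
    """Combine locally trained weights into one global model (FedAvg-style).

    Each update is (weights, num_local_samples). Raw training data never
    leaves the device; only the weight vectors are shared.
    """
    if not updates:
        raise ValueError("need at least one client update")
    total = sum(n for _, n in updates)
    if total <= 0:
        raise ValueError("total sample count must be positive")
    dim = len(updates[0][0])
    global_weights = [0.0] * dim
    for weights, n in updates:
        if len(weights) != dim:
            raise ValueError("all clients must share the same model shape")
        for i, w in enumerate(weights):
            # Clients with more local data pull the average further.
            global_weights[i] += w * n / total
    return global_weights


if __name__ == "__main__":
    # Two hypothetical edge sites: one with 1 sample, one with 3.
    print(federated_average([([1.0, 0.0], 1), ([3.0, 4.0], 3)]))
```

The averaged weights would then be pushed back to the edge devices as the next model version, closing the train-in-cloud, infer-at-edge loop.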

Ultimately, a thoughtful multicloud design that includes fog computing and a strategic mix of edge inference and cloud training unlocks scalable, resilient AI capabilities. This architecture supports diverse use cases—from predictive maintenance in manufacturing to personalized customer experiences in retail—while maintaining governance, security, and compliance across distributed environments. By embracing AI at the edge within a broader cloud-first strategy, organizations can respond rapidly to changing workloads and regulatory requirements without sacrificing control or performance.

Frequently Asked Questions

How do AI at the edge and edge computing complement cloud computing trends within a multicloud strategy to deliver real-time insights?

AI at the edge enables real-time inference by running models near data sources, reducing latency and bandwidth usage. Edge computing brings compute closer to sensors and devices, enabling immediate decisions without round trips to the cloud. Cloud computing trends such as modularity, serverless architectures, container orchestration, and centralized model training provide scalable storage, processing power, governance, and access to advanced AI services. A multicloud strategy allows organizations to select best-of-breed AI services and data processing across providers while mitigating vendor lock-in and improving resilience. Key practices include defining latency budgets, building secure data pipelines, and maintaining a feedback loop: edge-generated insights inform cloud model updates, and updated models are pushed back to edge devices for improved accuracy. In short, AI at the edge and edge computing complement cloud computing trends by enabling right-place/right-time processing in a governed, scalable multicloud environment.
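The feedback loop can be sketched as two cooperating objects: an edge device that queues low-confidence predictions for upload, and a cloud registry that publishes a new model version once enough feedback arrives. `ModelRegistry`, `EdgeDevice`, the 0.6 confidence threshold, and the version bump that stands in for retraining are all hypothetical placeholders for a real training pipeline and model store.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ModelRegistry:
    """Hypothetical cloud-side registry that publishes versioned models."""
    version: int = 1
    feedback: List[dict] = field(default_factory=list)

    def submit_feedback(self, samples: List[dict]) -> None:
        self.feedback.extend(samples)

    def retrain_if_needed(self, min_samples: int = 3) -> bool:
        # Stand-in for a real training job: bump the version once enough
        # edge feedback has accumulated, then clear the queue.
        if len(self.feedback) < min_samples:
            return False
        self.version += 1
        self.feedback.clear()
        return True


@dataclass
class EdgeDevice:
    device_id: str
    model_version: int = 1
    confidence_threshold: float = 0.6
    pending: List[dict] = field(default_factory=list)

    def infer(self, sample_id: str, confidence: float) -> None:
        # Inference runs locally; only uncertain cases are queued for upload.
        if confidence < self.confidence_threshold:
            self.pending.append({"device": self.device_id, "sample": sample_id,
                                 "confidence": confidence})

    def sync(self, registry: ModelRegistry) -> None:
        registry.submit_feedback(self.pending)
        self.pending = []
        if registry.version > self.model_version:
            self.model_version = registry.version  # "download" the newer model


if __name__ == "__main__":
    registry = ModelRegistry()
    device = EdgeDevice("camera-7")
    for sid, conf in [("s1", 0.9), ("s2", 0.4), ("s3", 0.5), ("s4", 0.3)]:
        device.infer(sid, conf)
    device.sync(registry)          # uploads the 3 low-confidence samples
    registry.retrain_if_needed()   # cloud publishes version 2
    device.sync(registry)          # edge picks up version 2
    print(device.model_version)    # prints 2
```

Uploading only uncertain cases keeps bandwidth low while still feeding the cloud the examples most likely to improve the next model.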

What is fog computing’s role in AI, Cloud, and Edge Computing architectures, and how does it support a scalable multicloud strategy?

Fog computing distributes processing across intermediate nodes between the edge and the cloud, enabling local analytics at regional sites, aggregating data streams from many devices, and forwarding summarized insights as needed. This reduces latency, lowers bandwidth requirements, and improves resilience in environments with intermittent connectivity. In AI, Cloud, and Edge Computing architectures, fog nodes can preprocess data, perform regional inferences, and coordinate with cloud training and global analytics. When combined with a multicloud strategy, fog computing provides a scalable layer that balances workloads across sites and providers, enhances data locality, and supports consistent governance and security across the stack. It is especially valuable in industries like manufacturing or utilities, where distributed, low-latency processing complements centralized AI training and edge inferences while maintaining visibility across the full architecture.
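For sites with intermittent connectivity, a fog node can use store-and-forward: summaries are queued locally and flushed, in order, once the uplink returns. This sketch assumes a caller-supplied `send` function that returns `True` on success; the `StoreAndForward` class and its bounded, drop-oldest buffer are illustrative choices rather than a prescribed design.

```python
from collections import deque
from typing import Callable, Deque


class StoreAndForward:
    """Buffer summaries on a fog node while the uplink is down.

    The buffer is bounded: when it is full, the oldest summary is dropped
    so the node keeps its most recent view of the site.
    """

    def __init__(self, send: Callable[[dict], bool], capacity: int = 1000):
        self._send = send  # assumed to return True if the cloud accepted the item
        self._queue: Deque[dict] = deque(maxlen=capacity)

    def publish(self, summary: dict) -> None:
        self._queue.append(summary)  # deque(maxlen=...) evicts the oldest item
        self.flush()

    def flush(self) -> int:
        """Send queued items in order; stop at the first failure."""
        sent = 0
        while self._queue:
            if not self._send(self._queue[0]):
                break  # link still down; retry on the next publish or flush
            self._queue.popleft()
            sent += 1
        return sent

    def pending(self) -> int:
        return len(self._queue)
```

Peeking at the head of the queue and removing an item only after a successful send preserves ordering and avoids losing data on a failed transmission; whether to drop the oldest or the newest data when full is a per-use-case decision.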

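| Aspect | Key Points |
|---|---|
| Introduction | AI, Cloud, and Edge Computing form a converging stack for designing, deploying, and scaling software and services. |
| The Core Pillars | AI supplies inference and decision-making, the cloud supplies training, storage, and orchestration, and the edge supplies proximity to data sources and users. |
| Data Workflow Pattern | Models are trained in the cloud, pushed to edge devices for inference, and improved with feedback from edge-generated insights. |
| AI at the Edge | Low-latency local inference, plus offline operation when connectivity is limited. |
| Cloud Backbone | Scalable compute and storage, centralized model training, governance, and workload orchestration. |
| Edge Latency Advantage | Avoids round trips to the cloud, reducing latency, bandwidth costs, and data movement. |
| Fog Computing | Intermediate nodes preprocess and aggregate data from many edge devices and forward summarized insights to the cloud. |
| Strategic Implications | Multicloud distribution of workloads improves resilience and reduces vendor lock-in, with consistent governance across edge, on-premises, and cloud. |
| Implementation Roadmap | Assess workloads and latency budgets, implement in phases, standardize APIs and open formats, and build end-to-end observability. |
| Use Cases Across Industries | Predictive maintenance and industrial control in manufacturing, personalized customer experiences in retail, and distributed processing in utilities. |
| Challenges | Unifying data pipelines, security controls, and policy governance across clouds and edge infrastructure while maintaining compliance. |
| Future Outlook | A well-planned AI, Cloud, and Edge Computing strategy is expected to become a competitive differentiator. |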

Summary

AI, Cloud, and Edge Computing together form a powerful framework for building resilient, scalable, and intelligent digital systems. By strategically distributing workloads across edge devices and cloud environments, organizations can achieve real-time insights, lower latency, and more flexible data governance. The path to success involves careful workload assessment, phased implementation, and a strong emphasis on security, interoperability, and governance. As technology evolves, a well-planned AI, Cloud, and Edge Computing strategy will be a competitive differentiator, enabling organizations to move faster, make smarter decisions, and deliver exceptional value to customers.